Don't Let Visitors Know Your Origin Server Exists

For the best performance, there’s no good reason content-focused sites should ever serve an uncached page to a visitor. With modern caching directives and other approaches on the table, it’s easier than ever to prevent visitors from knowing your origin exists at all.

In its heyday, I was entranced by the Jamstack – particularly by its promise of performance. I knew old-school HTTP caching existed, but I relegated it to being an unnecessarily hard thing boomers cared about. I had moved beyond, believing that a statically generated site is inherently more performant than a dynamic one. No exceptions.

That naiveté waned as smart people started sharing more about the fundamentals of HTTP caching, and why there's nothing technical holding back a "traditional" site from being just as fast as a static one.

The scales started to fall from my eyes. I began to realize that the principles you build around matter more to performance than the architecture you choose.

As it relates to the performance of content-heavy sites (blogs, marketing sites, etc.), the principle is that your users should never need to request anything directly from your origin server. They should have no reason to believe it exists, interacting with nothing more than some form of a middleman, like a CDN. Fortunately, this is getting easier to pull off, no matter how your site is architected.

Typical CDN Caching: Good, not Great

The most common way sites leverage CDN caching is fine, but it's insufficient for fully guarding your origin from needing to build a fresh response.

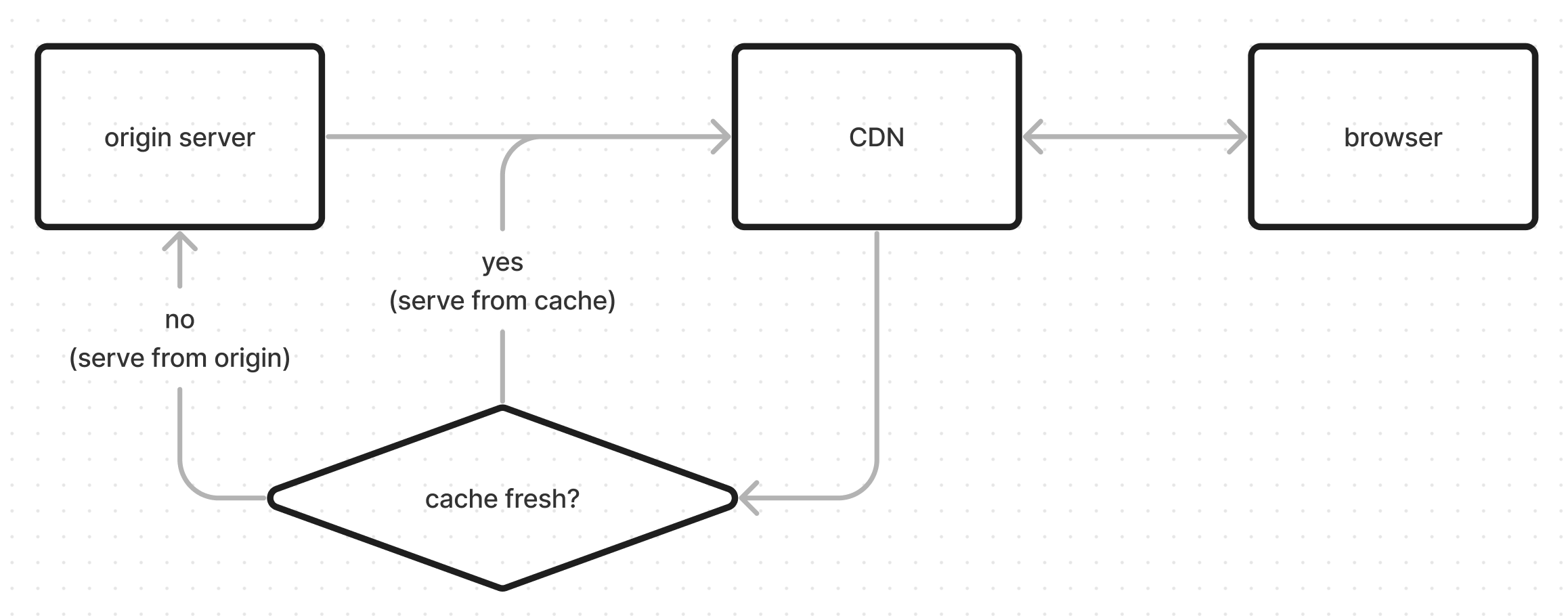

Here's a typical setup. A CDN acts as a reverse proxy between a visitor's browser and the server. When a request comes in, the source of the response depends on whether it's already cached and considered "fresh." If it is, the origin server won't be touched. If not, the request is passed along to the origin, which builds a new response that's used both to serve the user and to replenish the cache.

Many servers set a "Cache-Control" header roughly like this one, and CDNs will usually respect it.

Cache-Control: public, max-age=3600

In this case, assets will be considered "fresh" in the cache for an hour. After that, the CDN will head back to origin for a new response. It's a simple pattern to wrap your head around, and good for enforcing strict cache windows, but it also has some inherent challenges.
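To make that header concrete, here's a minimal Python sketch of how a cache might read its directives. `parse_cache_control` is a hypothetical helper, not any CDN's real parser, and it's simplified: it ignores edge cases like quoted values that contain commas.

```python
from __future__ import annotations


def parse_cache_control(header: str) -> dict[str, int | bool | str]:
    """Turn a Cache-Control value like "public, max-age=3600" into a dict.

    Simplified sketch: quoted values containing commas are not handled.
    """
    directives: dict[str, int | bool | str] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        name = name.strip().lower()
        if not sep:
            # Valueless directive, e.g. "public" or "no-store"
            directives[name] = True
            continue
        value = value.strip().strip('"')
        # Delta-seconds directives (max-age, s-maxage, ...) become ints
        directives[name] = int(value) if value.isdigit() else value
    return directives
```

For example, `parse_cache_control("public, max-age=3600")` returns `{"public": True, "max-age": 3600}`, which a cache would interpret as "shareable, fresh for 3,600 seconds."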

You sacrifice the occasional user.

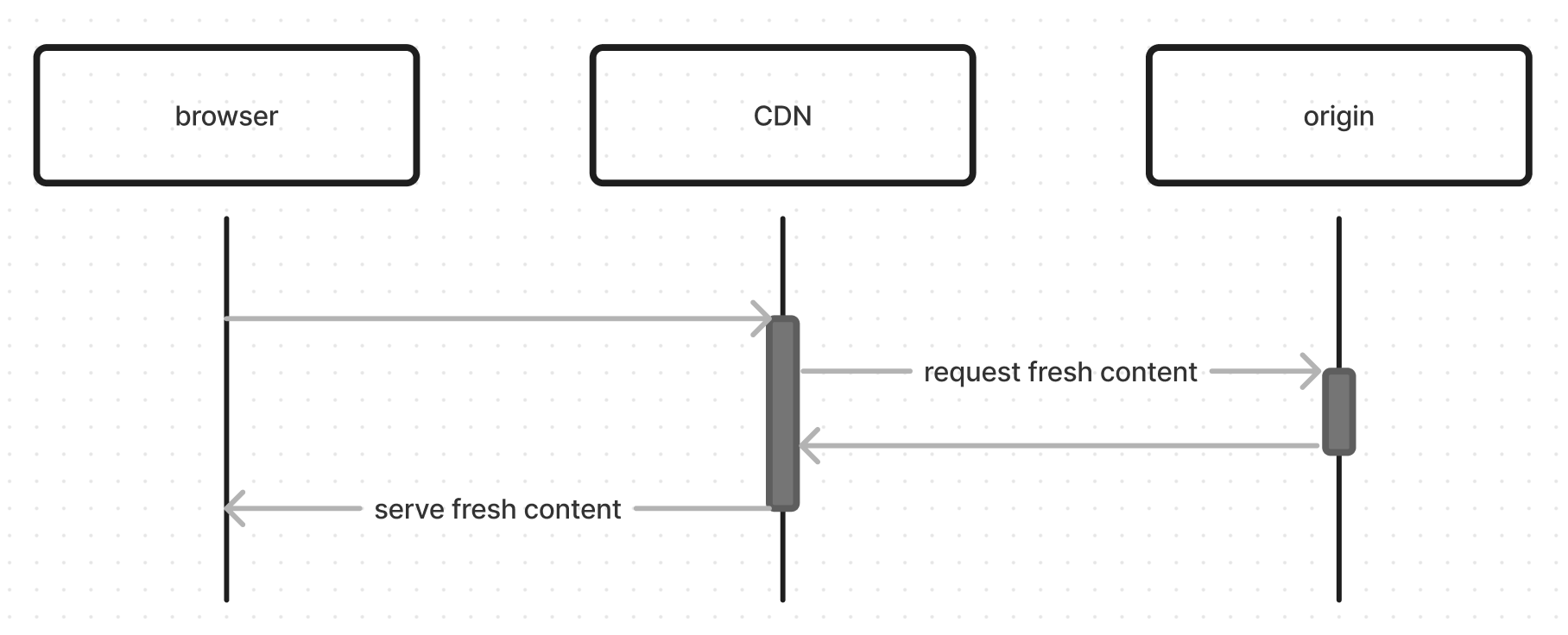

With a caching strategy like this, the CDN is like an apathetic high school student: lazy and reactive. Instead of preemptively updating the cache on its own, it cares about content staleness only when a request is received. And then, if it's expired (after 3,601 or more seconds, based on the example above), the visitor pays the price. The diagram above shows that "stale cache" scenario.

That unfortunate visitor needs to wait for a fully synchronous trip all the way back to the origin server. It won't impact a lot of people, and the cost will depend on the performance of your server. But still, it sacrifices the experience of the occasional visitor by design. And if you really pride yourself on consistent, performant experiences, it shouldn't sit well with you.
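Here's a minimal Python sketch of that reactive behavior, assuming a single cache node and an injectable clock so time can be faked. `ReactiveCache`, `origin`, and the HIT/MISS/EXPIRED labels are hypothetical names. Nothing happens until a request arrives, and a stale entry makes *that* request wait on the origin.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Entry:
    body: str
    stored_at: float  # when the response was cached (seconds)
    max_age: int      # freshness lifetime taken from Cache-Control


class ReactiveCache:
    """Toy CDN cache that only checks freshness when a request comes in."""

    def __init__(self, origin: Callable[[str], str], max_age: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.origin = origin
        self.max_age = max_age
        self.clock = clock
        self.store: dict[str, Entry] = {}

    def get(self, path: str) -> tuple[str, str]:
        now = self.clock()
        entry = self.store.get(path)
        # Fresh while the cached copy's age is below max-age
        if entry is not None and now - entry.stored_at < entry.max_age:
            return entry.body, "HIT"
        # Missing or stale: this visitor waits on a synchronous origin trip
        body = self.origin(path)
        self.store[path] = Entry(body, now, self.max_age)
        return body, ("MISS" if entry is None else "EXPIRED")
```

With `max_age=3600`, a request 30 minutes after the first one is a HIT, while a request 3,601 seconds in comes back EXPIRED, and that visitor is the one who paid for the origin round trip.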

CDNs are unreliable friends.

I remember feeling a little disappointed when I realized this: telling a CDN to cache your assets with a certain "Cache-Control" header does not guarantee they'll actually respect it. Take, for example, the following header:

Cache-Control: public, max-age=3600

That's not an imperative for a CDN to cache your asset for an hour. It's more of a plea for them to cache it that long if they're willing. There's no obligation for them to respect that timeframe, and depending on your traffic and overall priority, your assets may be purged without notice (a practice referred to as "cache eviction"). I like how Sean Roberts puts it: "it’s a giant popularity contest inside the cache store." And it's not really a secret either. Cloudflare's been open about it, for example:

If cache storage in a certain region is full, our network avoids imposing these inefficiencies on our customers by evicting less-popular content from the data center and replacing it with more-requested content.

I felt this pain while attempting to move from a static site to a dynamic one with good cache headers. I was using Cloudflare's free tier and I also wasn't getting a lot of traffic. As a result, I frequently needed to wait for fresh requests to resolve due to surprise cache evictions. It was frustrating, and one of the reasons I opted to keep using a fully static approach.
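The "popularity contest" is easy to see with a toy fixed-size cache. This Python sketch uses least-recently-used (LRU) eviction purely as a stand-in; real CDNs use their own policies, and `LRUCache` is a hypothetical name. The point is only that a rarely requested page gets pushed out by busier ones, no matter what its Cache-Control header said.

```python
from __future__ import annotations

from collections import OrderedDict


class LRUCache:
    """Toy fixed-capacity cache that evicts the least recently used entry.

    LRU is a simplified stand-in for "less-requested content goes first";
    it is not how any particular CDN actually decides.
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items: OrderedDict[str, str] = OrderedDict()

    def get(self, key: str) -> str | None:
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key: str, value: str) -> None:
        self.items[key] = value
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the coldest entry
```

On a low-traffic site, your pages are the "obscure" entries in this picture, which is exactly why they kept disappearing on me.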

Some Good Options for Guarding Your Origin

Despite these difficulties, there are still some good ways to prevent your origin server from ever directly serving a fresh request to a user. And in my opinion, they're becoming so accessible that it's hard to justify not using one of them for maximum performance.

Statically Generate Your Site

It's obvious, but still a great option. With all of your site's content being generated in advance, you never need to worry about a slow request/response cycle punishing a visitor or threatening your time-to-first-byte. Your "origin" is whatever machine runs your build command on each deployment. After that, it's out of the picture.
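At its core, a static build is just "render every page to a file before anyone asks for it." Here's a minimal Python sketch of that idea; the `PAGES` content source and the `build` function are hypothetical stand-ins for a CMS and a real static site generator.

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical content source; in practice this comes from a CMS or Markdown files.
PAGES = {
    "/": "<h1>Home</h1>",
    "/about/": "<h1>About</h1>",
}


def build(out_dir: Path, pages: dict[str, str]) -> list[Path]:
    """Render every page to an index.html file ahead of time (the "origin" step)."""
    written = []
    for route, html in pages.items():
        # "/about/" -> out_dir/about/index.html, "/" -> out_dir/index.html
        target = out_dir / route.strip("/") / "index.html"
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(html, encoding="utf-8")
        written.append(target)
    return written
```

Once this runs during deployment, every request is answered from a file that already exists, so no visitor ever waits on rendering.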

CDN cache eviction may still happen, but when it does, it just means serving ready-to-go files from a file system. These "cold" responses might not be globally distributed, but they're still measurably faster than a response built from scratch. It's no surprise there are so many resources out there on hosting static sites directly from an S3 bucket. I even use Cloudflare's R2 product to serve uncached image requests with PicPerf. Trust me. It's fast.

It's worth mentioning that this option doesn't come without its challenges either. The most common one is ensuring that when you update your content, your static files are regenerated and any CDN in front of them is purged at the same time. If not, there'll be some annoying inconsistencies between the source of your content (a CMS), your static files, and whatever a CDN is still serving to your visitors. Thankfully, if you're using a platform like Vercel or Netlify, much of that hassle is abstracted away (although there's probably still room for improvement).
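If you're wiring this up yourself, the order of operations matters: regenerate first, then purge. Purge first, and a request that lands in between can refill the CDN from the *old* files. Here's a minimal Python sketch of a publish hook; `publish`, `rebuild`, and `purge_cdn` are hypothetical hooks you'd connect to your own build tool and your CDN's purge API.

```python
from __future__ import annotations

from typing import Callable


def publish(changed_routes: list[str],
            rebuild: Callable[[str], None],
            purge_cdn: Callable[[list[str]], None]) -> None:
    """Propagate a content update: regenerate files first, then purge the CDN.

    `rebuild` and `purge_cdn` are hypothetical hooks, not a real library API.
    """
    for route in changed_routes:
        rebuild(route)          # 1. write the fresh static file
    purge_cdn(changed_routes)   # 2. only now drop the stale edge copies
```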

Use stale-while-revalidate w/ a Persistent Backup Cache

If you're using a more orthodox framework to serve content, there are some highly useful tools to make origin protection a lot easier and more reliable.

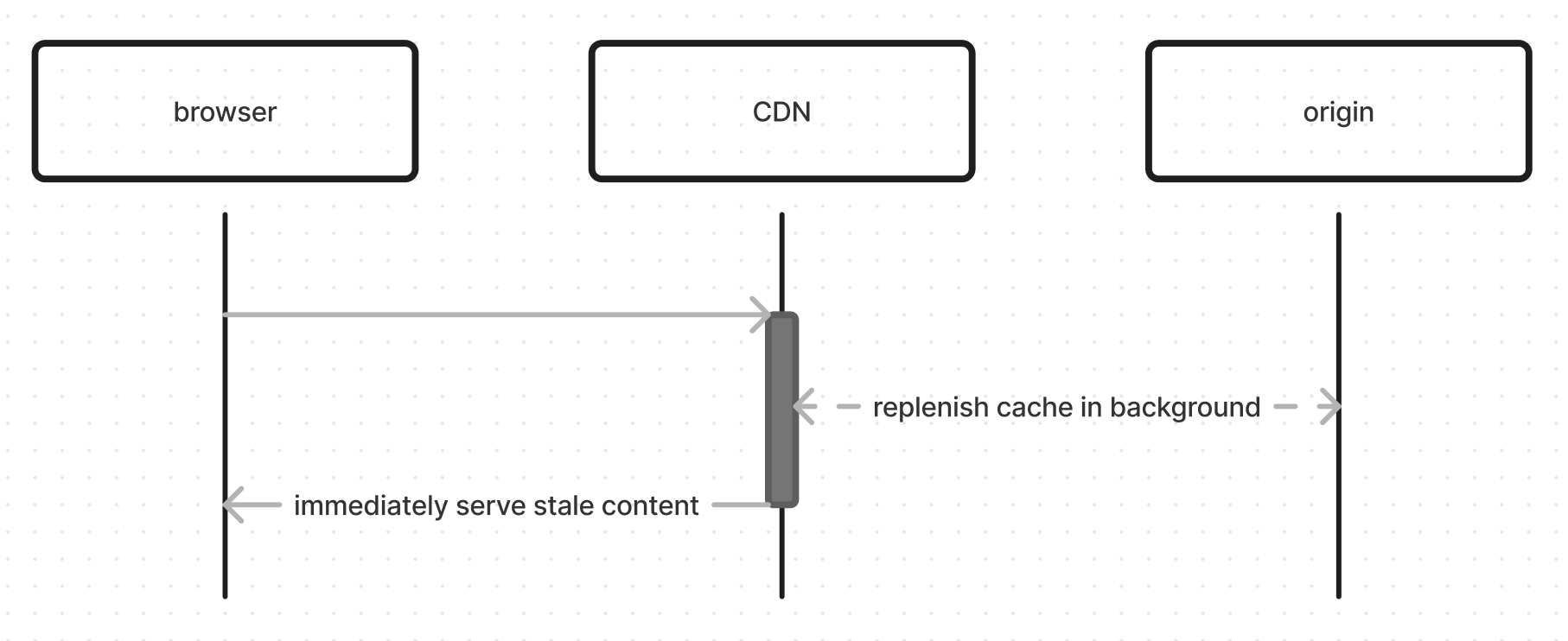

The stale-while-revalidate cache directive is a part of that. It allows you to set a max-age like usual, but you can also give the CDN a timeframe in which the stale version can be served while a fresh version is regenerated & cached in the background. Consider this header:

Cache-Control: max-age=300, stale-while-revalidate=604800

With this in place, the origin server tells the CDN that the contents will go stale after only five minutes. But that doesn't mean the next user will need to wait for a request back to origin. If a request comes in at any point during the following week (604,800 seconds), the stale contents will be served from the cache while the CDN fetches a fresh version in the background for the next user. The diagram above shows how that changes the flow.
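Here's a minimal Python sketch of the decision a cache makes for each request under that header. `decide` and `Action` are hypothetical names, ages are in seconds, and it assumes the cached copy hasn't been evicted.

```python
from enum import Enum


class Action(Enum):
    SERVE_FRESH = "serve from cache"
    SERVE_STALE_AND_REVALIDATE = "serve stale, refresh in background"
    FETCH_FROM_ORIGIN = "visitor waits on origin"


def decide(age: float, max_age: int, swr: int) -> Action:
    """Pick what a cache does, given the cached copy's age in seconds."""
    if age < max_age:
        return Action.SERVE_FRESH
    if age < max_age + swr:
        # Inside the stale-while-revalidate window: respond instantly,
        # and kick off a background request to origin for the next visitor.
        return Action.SERVE_STALE_AND_REVALIDATE
    # Past both windows: back to the old, blocking behavior
    return Action.FETCH_FROM_ORIGIN
```

With `max_age=300` and `swr=604800`, a copy that's two minutes old is served fresh, a day-old copy is served stale while it's refreshed, and only a copy older than 605,100 seconds forces a visitor to wait.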

It's a great end result: as long as at least one request comes in each week, every user will get a cached response, even as content continues to change.

Depending on your needs, you can beef this up too. Let's say you have content you update very frequently, but you still want to prevent your origin from serving a visitor directly, and you're a little flexible about how strictly those updates are propagated. You could use the stale-while-revalidate directive to make the contents stale after only a minute, but be willing to serve them for up to a year if another request comes in (each such request updating the cache for the next user):

Cache-Control: max-age=60, stale-while-revalidate=31536000

With a strategy like this, you're effectively serving dynamic content at static speeds. Of course, much of the value here is lost in the event of a cache purge. And that's why I personally wouldn't reach for SWR in combination with a plain, ol' CDN. Thankfully, though, that's becoming more solvable as providers offer more permanent, persistent caching products.
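Those raw numbers are easier to reason about as durations: one minute is 60 seconds, and a 365-day year is 365 × 86,400 = 31,536,000 seconds. Here's a small Python helper that derives the header above; `cache_control` is a hypothetical function, not part of any framework.

```python
from datetime import timedelta


def cache_control(max_age: timedelta, stale_while_revalidate: timedelta) -> str:
    """Build a Cache-Control value from human-friendly durations."""
    return (
        f"max-age={int(max_age.total_seconds())}, "
        f"stale-while-revalidate={int(stale_while_revalidate.total_seconds())}"
    )


# One minute fresh, then servable while revalidating for a 365-day year
header = cache_control(timedelta(minutes=1), timedelta(days=365))
# header == "max-age=60, stale-while-revalidate=31536000"
```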

One of them is Cloudflare's Cache Reserve. When it's enabled, all of your cacheable content will be stored in an R2 bucket as a sort of persistent backup cache, and it'll be tapped whenever the first-class CDN cache comes up empty. The default TTL for this content is 30 days, but from my understanding (and as suggested by their own documentation), you can keep it around "as long as you want," making it a great companion for long-tailed, aggressive cache headers.
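Conceptually, this adds a second tier between the edge and your origin. Here's a minimal Python sketch of that lookup order; `TieredCache` is a hypothetical name, and this is only loosely modeled on the idea behind products like Cache Reserve, not their actual implementation.

```python
from __future__ import annotations

from typing import Callable


class TieredCache:
    """Edge cache backed by a persistent store, with origin as the last resort.

    A conceptual sketch only; real persistent-cache products differ in detail.
    """

    def __init__(self, origin: Callable[[str], str]) -> None:
        self.edge: dict[str, str] = {}        # fast, but may be evicted
        self.persistent: dict[str, str] = {}  # slower, long-lived backup
        self.origin = origin

    def get(self, path: str) -> tuple[str, str]:
        if path in self.edge:
            return self.edge[path], "edge"
        if path in self.persistent:
            self.edge[path] = self.persistent[path]  # re-warm the edge
            return self.edge[path], "persistent"
        body = self.origin(path)
        self.edge[path] = self.persistent[path] = body
        return body, "origin"

    def evict(self, path: str) -> None:
        """Simulate the edge dropping an entry; the backup copy survives."""
        self.edge.pop(path, None)
```

After an edge eviction, the next request is answered from the persistent tier, so the origin is still only contacted once per page.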

Also worth mentioning is Bunny.net's Perma-Cache, which touts a very similar model to Cache Reserve. Cacheable contents are stored in storage buckets replicated across a number of locations, and when the primary CDN has been purged, those assets are accessed. As far as I can tell, Bunny doesn't support the standard stale-while-revalidate directive, but they do offer a proprietary version of the same functionality: "Stale Cache Delivery."

Regardless of the route you go, it's encouraging to see more options out there for combining the model of serving stale content while revalidation occurs in the background with long-term caching that isn't at risk of unexpected eviction.

Cache Static HTML on Your Origin

If you don't want to rely so much on third parties to store your cached content remotely, there's another option on the table – locally storing static HTML on origin itself.

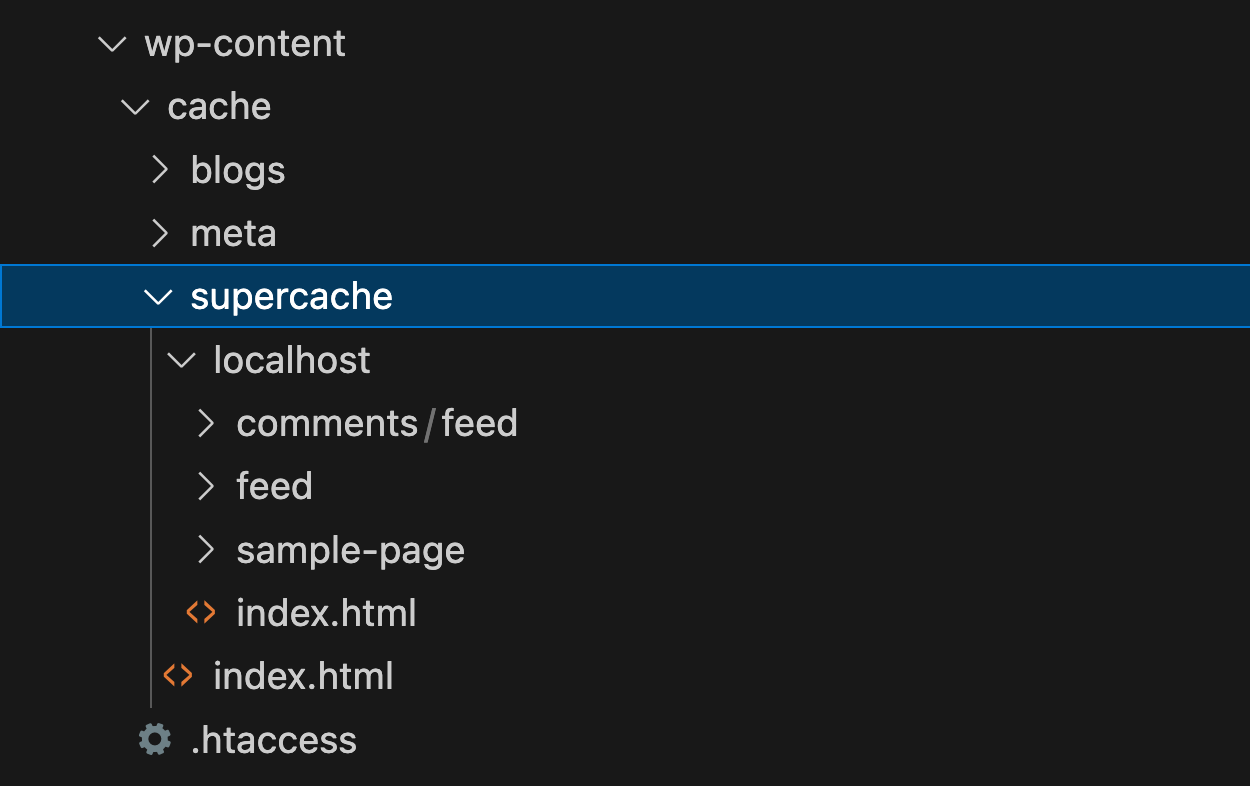

This approach is fairly common in the WordPress space. Caching plugins like WP Super Cache will store static HTML in a particular directory and serve it when a request comes in. You'll end up seeing something like this in your WordPress directory tree:

Those static files aren't globally distributed the way a CDN's copies would be, but serving them greatly reduces the duration of the request because the server doesn't need to build the content from scratch.
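Outside of WordPress, the same idea fits in a few lines. Here's a minimal Python sketch of an on-origin page cache; `serve` and `render` are hypothetical names, and it assumes the incoming path is already normalized (a real server must reject `..` segments before touching the file system).

```python
from __future__ import annotations

from pathlib import Path
from typing import Callable


def serve(path: str, cache_dir: Path, render: Callable[[str], str]) -> str:
    """Serve pre-rendered HTML from disk, rendering and saving it on a miss.

    Assumes `path` is an already-normalized URL path (no ".." segments).
    """
    # "/blog/post/" -> cache_dir/blog/post/index.html
    cached = cache_dir / path.strip("/") / "index.html"
    if cached.exists():
        return cached.read_text(encoding="utf-8")  # no templating, no DB queries
    html = render(path)                            # the expensive part
    cached.parent.mkdir(parents=True, exist_ok=True)
    cached.write_text(html, encoding="utf-8")
    return html
```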

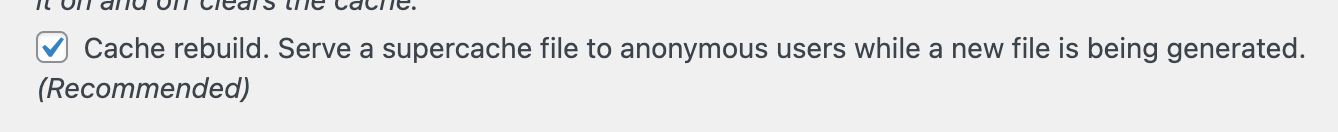

The stale-while-revalidate model is also employed by many of these plugins, albeit under a different label. WP Super Cache, for example, refers to it as "cache rebuild." When it's enabled, stale content will be served immediately, while fresh content is generated in the background:

That's highly WordPress-specific, but the approach could be leveraged in any sort of application if needed. The point is that you're yet again shielding your origin server from directly serving a request from scratch.
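Here's a minimal Python sketch of that "cache rebuild" idea in a generic application: the visitor gets the existing file right away, and a background thread regenerates it. `serve_with_rebuild` and `render` are hypothetical names, and staleness is decided by the caller (for example, from the file's modification time).

```python
from __future__ import annotations

import threading
from pathlib import Path
from typing import Callable


def serve_with_rebuild(cached: Path, render: Callable[[], str],
                       is_stale: bool) -> tuple[str, threading.Thread | None]:
    """Return cached HTML immediately; if it's stale, rebuild it in the background.

    Also returns the rebuild thread (None if no rebuild started) so callers can wait on it.
    """
    html = cached.read_text(encoding="utf-8")
    if not is_stale:
        return html, None

    def rebuild() -> None:
        fresh = render()
        tmp = cached.with_suffix(".tmp")
        tmp.write_text(fresh, encoding="utf-8")
        # Rename into place so readers never see a half-written file (atomic on POSIX)
        tmp.replace(cached)

    worker = threading.Thread(target=rebuild, daemon=True)
    worker.start()
    return html, worker
```

A production version would also make sure several stale requests arriving at once don't each kick off their own rebuild.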

Uphold the Principle, but Don't Go Crazy

These are just a few good methods for effective origin shielding. There are a million more you could go nuts with, but at some point, you'll need to weigh the complexity against the potential benefits. For example, I can see someone obsessed with edge functions (me) setting up a Redis instance with entries caching the markup of every page. "Cool," but possibly not worth the squeeze when there are more boring but effective options on the table.

Like I said: what's more important is adhering to the principle. Whether it's an SSG, Laravel application, or an Express server running on a Raspberry Pi in your garage, don't allow your user to ever bear the burden of a full request to origin. Give 'em no reason to believe it even exists.
