I’ve Boarded the SSG Train Again

If you haven’t noticed, we’re in a bit of a server-rendered resurgence right now. It seems like the heyday of the Jamstack, and more specifically, static site generation (SSG) is behind us, and the pendulum is once again swinging toward more traditional paradigms. Namely: HTML that’s generated on demand/request rather than in advance.

Despite being somewhat of a Jamstack fanatic the past few years, I’m not mad about the shift. When it began picking up steam, SSG was pitched as a panacea for our biggest web development problems. It claimed to be superior in terms of performance, cost, and complexity, when it was really just a different tactic to meet these challenges.

Different, Not Superior

Consider performance. With a good CDN & caching strategy, there’s nothing technical preventing a server-rendered site from being just as performant as a statically generated one. Modern caching policies like “stale-while-revalidate” can even prevent a user from ever hitting an uncached response, and are being supported by an increasing number of CDN providers. Sure, SSG has the advantage of being statically served by default, but at the end of the day, the <div>s are still shipped quickly & close to the user, regardless of when they’re generated.

Or, take complexity. SSG hype insisted its architecture inherently makes for simpler sites, since there’s no need to maintain a running server, database, and all the other infrastructure required to keep a site from folding under large amounts of traffic. In fact, Gatsby’s documentation still makes that claim today. But that complexity hasn’t really gone anywhere — it’s just shifted. None of these components are whisked away when you move to an SSG. You’ll still need a database (or some other data source), infrastructure to build your code, and a web server to deliver it. The only difference is when most of it is used: on build or on request.

The Understated Trade-Offs

On top of that, this hype has often downplayed the inherent challenges of an SSG approach, with the most obvious one being the inability to serve dynamic content. There are, of course, ways to tackle this too, but they just lead to another layer of trade-offs.

For example, we could serve a static site and then fetch & render dynamic content on the client. But that means shipping more JavaScript, impacting metrics like first input delay and increasing the number of bytes users need to download, parse, & execute before they can even access any of your content. At best, the shell of your site will load quickly, but seeing any meaningful content may still be a long way off. Plus, a site depending on JavaScript to render will inevitably run into SEO difficulties.

Build time is another big one. Depending on the generator, the amount of content on your site, and several other factors, committing to an SSG means being ready to wait for the entire site to build in order to update or publish anything. Platforms like Vercel and Gatsby (probably others now too) have skirted this by introducing incremental generation features, but it isn’t the norm across all platforms, and certainly not a table stakes feature for any given SSG (yet). And for that reason, making such a move requires you to ask, at minimum: “Do I have the sort of site that can afford to wait for a build to complete before my content will be available?”

There’s much more I could belabor here. The point is that SSGs haven’t become the WD-40 of our problems, and I think most people are starting to more clearly see that (including myself).

An Itch to Try Something New

My own site’s been built with a few different SSGs over time, including Jekyll, Gatsby, and Next.js. I’ve had neither complex needs nor a ton of content, and so the paradigm’s been a great fit. But a few months ago, when that vibe started to shift and I was more commonly pondering all of the aforementioned thoughts, I began to wonder if I should take a stab at building the next iteration with a server-rendered framework. At the time, Remix was relatively new, open-sourced, and had a ton of Twitter energy around it. I bit and committed to using it for my site.

My Priorities

I went into the rebuild with a few priorities in mind:

Performance/Caching

I take pride in how fast a site I’ve built loads when it’s hosted on SSG-friendly platforms like Vercel and Netlify, and so this was a cold, hard requirement for me. If I was gonna switch, I’d need to have SSG-like performance, preferably using stale-while-revalidate on Cloudflare (the number of CDNs that support this policy is still depressingly low, but thankfully, Cloudflare’s incredible and I had already been using them for other things). And this caching couldn’t be flaky. I wanted almost no cache misses after first request, as well as the confidence it was actually happening.

Content Management

Ever since I’ve been blogging, I’ve used Markdown files to store my content, all stuck directly in my repository. I’ve appreciated how simple it is, as well as the fact that I don’t need to worry about a dedicated CMS as a dependency. I also really like writing in Markdown.

But over time, the clunkiness of publishing a new post became a little too annoying. I’d draft my posts in Notion (a really nice writing experience), and when I was ready, I’d perform a full export into a Markdown file. Finally, I’d stick it and any images into my repository. The number of steps it took to launch a new post became a hindrance to me publishing anything at all. If I were to rebuild, I wanted to give a big upgrade to my writing experience along the way.

Cost

Platforms like Vercel and Netlify are too good to us. They make it disgustingly easy to stand up a high-performance site with a ton of serverless bells & whistles for free (they both have very generous free tiers). I didn’t want to introduce another bill into my technological ecosystem if I didn’t have to (subscription fatigue is real), so if I were to move to something else, I wanted to find some reputable platform with a similar model. Cheap, but good.

The Rebuild Experience

With these priorities in mind, it wasn’t hard to nail down the rest of the stack I’d use for my new Remix site:

Hosting: Fly.io

I’d been hearing really good things about Fly from the likes of Kent C. Dodds and other big names in the space for quite a while, and so I had a pretty decent bank of trust built up with them, even though I had never used them. Plus, they let you deploy two apps for free, satisfying that “cost” priority.

Caching: Cloudflare

As mentioned, I had been happily using Cloudflare for quite some time, and it’s one of the few CDN providers I’ve come across that supports stale-while-revalidate. I also wanted to reserve the ability to use their Workers product if it became helpful for whatever reason (and it did, but that’s a post for another time).

CMS: Notion

As mentioned, I’d been using Notion to draft my posts for a while. Aside from the nice writing experience, it supports Markdown, including code blocks for several different languages. The timing also felt right, since their official API had recently been opened up and was supported by official SDKs. I didn’t know what to expect from a performance standpoint, but I was hoping my caching strategy would keep my visitors from experiencing any issues.

Where Things Got Hard

Overall, I really enjoyed building out the site. Remix offers a pretty good developer experience, is well-documented, and I enjoyed how it leans into web standards. There were, however, a couple of things that started to make me wonder if it was worth finishing the effort.

Deployment

Getting my site stood up on Fly.io wasn’t monumentally difficult, but there was some notable friction. Most of my issues on that front involved tweaking my Docker image after ripping out a ton of stuff provided to me by the Indie Stack I chose. It’s very possible I could’ve gone another route (maybe no stack at all) and had a smoother experience.

But the other pain point here was getting it wired up to automatically deploy when I pushed a change to GitHub. The stack I chose came with a workflow, but it didn’t “just work,” which is something I got quite accustomed to when working with Vercel and Netlify. I realize these aren’t apples-to-apples comparisons here. Remix is a very different type of application compared to what I had been using. But still, being spoiled for so long with my SSG sites made it less than fun to wade into something else.

Caching

I mentioned that caching was my top priority in rebuilding my site. I went into the migration pretty optimistic about meeting that priority. Setting up Cloudflare was easy, as expected, as was configuring the headers on my site, which looked like this:

```js
// Consider stale after 1 hour, but serve stale & refresh cache for 6 months.
responseHeaders.set(
  "Cache-Control",
  "public, max-age=3600, s-maxage=3600, stale-while-revalidate=15780000"
);
```

Based on that header, I expected my content to be served straight from the CDN for up to one hour. After that, it would go “stale,” but Cloudflare would still serve that stale cache for up to six months later. And whenever it did serve that stale content, it would refresh the cache behind the scenes. So, as long as people kept visiting a page at least once every six months, no one would ever experience a slow, uncached response.
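To make that expectation concrete, here’s a rough sketch of how a shared cache is permitted to treat a response of a given age under that header. The names `parseCachePolicy` and `classify` are hypothetical helpers I made up for illustration (they aren’t part of Remix or Cloudflare), and the logic only models what the header allows, not what any particular CDN will actually do.

```typescript
// Hypothetical helpers for illustration; not part of Remix or Cloudflare.
type CacheState = "fresh" | "stale-but-servable" | "expired";

interface CachePolicy {
  sMaxAge: number; // seconds a shared cache may treat the response as fresh
  staleWhileRevalidate: number; // extra seconds it may serve stale while refreshing
}

// Pull the numeric directives out of a Cache-Control header value.
function parseCachePolicy(header: string): CachePolicy {
  const directives: Record<string, number | undefined> = {};
  for (const part of header.split(",")) {
    const [name, value] = part.trim().toLowerCase().split("=");
    if (value !== undefined && /^\d+$/.test(value)) {
      directives[name] = Number(value);
    }
  }
  return {
    // Shared caches prefer s-maxage over max-age when both are present.
    sMaxAge: directives["s-maxage"] ?? directives["max-age"] ?? 0,
    staleWhileRevalidate: directives["stale-while-revalidate"] ?? 0,
  };
}

// Decide what the policy allows for a cached response of the given age.
function classify(ageSeconds: number, policy: CachePolicy): CacheState {
  if (ageSeconds < policy.sMaxAge) return "fresh";
  if (ageSeconds < policy.sMaxAge + policy.staleWhileRevalidate) {
    return "stale-but-servable";
  }
  return "expired";
}
```

Feeding my header into `parseCachePolicy` and then calling `classify` with an age of two hours lands in the “stale-but-servable” window, which is the state I was counting on to last for months.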

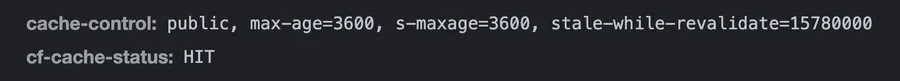

But that’s not what happened. I started inspecting the response headers shortly after I deployed these changes. It would return a “HIT,” meaning the page was successfully served from Cloudflare’s cache:

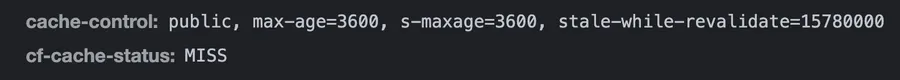

But then, a short while later (if I remember correctly, it was maybe just an hour or so), refreshing that page would result in a “MISS”:

It got pretty frustrating as it continued to occur, but things became a little clearer after Googling around. As it turns out, Cloudflare and other CDNs make no guarantee as to how long they will actually cache a resource. Those time values I used in my headers were really just the maximum amount of time Cloudflare is permitted to do it. No promises are made beyond that.
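If you’d rather not eyeball the network tab, the same check can be scripted. Cloudflare reports the cache result in the `CF-Cache-Status` response header; the sketch below reads it. `wasServedFromCache` and `HeaderReader` are hypothetical names of my own, and the function treats anything other than “HIT” as not served from cache, which is a simplification on my part.

```typescript
// Minimal shape shared by the Fetch API's Headers object and simple test stubs.
interface HeaderReader {
  get(name: string): string | null;
}

// Returns true for a HIT, false for any other status,
// and null when the header is missing entirely.
function wasServedFromCache(headers: HeaderReader): boolean | null {
  const status = headers.get("cf-cache-status");
  if (status === null) return null;
  return status.trim().toUpperCase() === "HIT";
}
```

In practice, you’d pass in the `headers` property of a `fetch` response for one of your own pages and log the result on a schedule to see how often misses really occur.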

It’s hard to find any official documentation on all of this, but it appears that Cloudflare will commonly use this distinction to purge infrequently accessed resources, making it a bigger concern for smaller, personal sites like my own.

Sifting more, it apparently isn’t a new thing for Cloudflare, and may very well be tied to how it differentiates itself as a full-on reverse proxy, rather than strictly a static file host. It did cross my mind that it might behave differently for paid plans, but I didn’t do enough digging to figure out if it does.

As I was piecing this together from various personal experiences and forums on the internet, it slowly became clear I wouldn’t have the level of confidence in caching I wanted. Instead, I’d need to explore other CDN offerings that explicitly support more permanent, longer-term caching, or else risk several requests needing to wait for a full response from Notion before showing any content.

Of the alternatives I came across, Bunny seemed the most interesting. Not only do they clearly tout permanent caching (“Perma-Cache,” as they call it), they also appear to support an off-brand version of stale-while-revalidate called “Stale Cache Delivery.” The main “downside” here is that there isn’t a free tier, and I’m also not that interested in diving into a totally new CDN right now. Not to mention, I’m sure there are other trade-offs I’d need to spend time considering as well (ex: Cloudflare has over double the number of POPs that Bunny does).

In Fairness, SSG Caching Ain’t Perfect

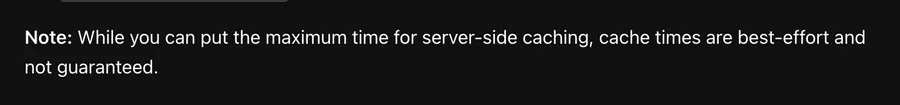

It’s really important to note that these caching issues don’t just go away by using Vercel or Netlify. Even for them, cache times are never guaranteed to reach the max-age you set in your headers. For example, Vercel claims assets are cached for up to 31 days, by default. But it also includes this disclaimer:

The nice thing, though, is that because the site is statically generated, a fresh request doesn’t need to wait for data to be fetched before the HTML can even be built. Instead, the server just goes back to origin to pick up ready-made HTML files, making those “cold” requests pretty lukewarm.

Platform FOMO

Embarking on this journey really made me appreciate some of the things Jamstack-friendly platforms have turned into features we might take for granted. CI/CD is a breeze, leaving very little friction between getting something off your machine and deployed. CDN caching and SSL certificates just work without you thinking about it. It’s very easy to spin up a branch of your site to a unique, shareable URL. Minimal-config serverless functions provide a means of performing tasks requiring a server (I’ve processed TypeIt license purchases on a Netlify function for years).

And then there are newer features that begin to blur the traditional line between static and server-rendered sites. A while back, Vercel rolled out incremental static regeneration, giving your site a proprietary stale-while-revalidate experience without dealing with a CDN (I’m using this on my personal dashboard and it works great). Another example is edge functions offered by both Netlify and Vercel, making it possible to run dynamic, server-side logic real close to your users, rather than only at your origin.

These and other players are pushing the limit hard and fast, and it makes me not want to miss out on the next interesting thing they’ll roll out.

We’ll See Where this Goes

I was a little bummed about the time I invested in that Remix rebuild that will probably never see the light of day, but in hindsight, I’m grateful to have learned a ton in the process, especially with regard to caching and CDNs. I’m also left with a fresh sense of excitement for the Jamstack and some of the innovations it’s helped shepherd in.

Even so, it’s only a matter of time before I feel the itch to tear all of this down and rebuild it with the next big thing. I’m giving myself six months.
