We use a lot of plain, old objects to store key/value data in JavaScript, and they're great at their job – clear and legible:

```javascript
const person = {
  firstName: 'Alex',
  lastName: 'MacArthur',
  isACommunist: false
};
```

But when you start dealing with larger entities whose properties are frequently being read, changed, and added, it's becoming more common to see people reach for Maps instead. And for good reason: in certain situations, a Map has several advantages over an object, particularly where performance is a sensitive concern or where the order of insertion really matters.
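Here is a small illustration of the insertion-order point, using arbitrary keys. A plain object lists integer-like keys first, in ascending numeric order. A Map iterates in exactly the order its entries were added.

```javascript
// A plain object reorders "integer-like" keys: they're listed first,
// in ascending numeric order, no matter when they were added.
const obj = {};
obj.zebra = true;
obj['10'] = true;
obj.apple = true;
obj['2'] = true;

console.log(Object.keys(obj)); // ['2', '10', 'zebra', 'apple']

// A Map always iterates in insertion order.
const map = new Map();
map.set('zebra', true);
map.set('10', true);
map.set('apple', true);
map.set('2', true);

console.log([...map.keys()]); // ['zebra', '10', 'apple', '2']
```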

But as of late, I've realized what I especially like to use them for: handling large sets of DOM nodes.

This thought came up while reading a recent blog post from Caleb Porzio. In it, he's working with a contrived example of a table composed of 10,000 table rows, one of which can be "active." To manage state as different rows are selected, an object is used as a key/value store. Here's an annotated version of one of his iterations. I also added semicolons because I'm not a barbarian.

```javascript
import { ref, watchEffect } from 'vue';

let rowStates = {};
let activeRow;

document.querySelectorAll('tr').forEach((row) => {
  // Set row state.
  rowStates[row.id] = ref(false);

  row.addEventListener('click', () => {
    // Update row state.
    if (activeRow) rowStates[activeRow].value = false;

    activeRow = row.id;

    rowStates[row.id].value = true;
  });

  watchEffect(() => {
    // Read row state.
    if (rowStates[row.id].value) {
      row.classList.add('active');
    } else {
      row.classList.remove('active');
    }
  });
});
```

That does the job just fine (and it had nothing to do with the post's point, so zero shade is being thrown here). But! It uses an object as a large hash-map-like table, so the keys used to associate values must be strings, which requires a unique ID (or other string value) to exist on each item. That carries a bit of added programmatic overhead, both to generate those values and to read them when they're needed.
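To see why the string requirement matters, consider what happens if you skip the ID and use the row itself as an object key. This sketch uses two plain objects as stand-ins for DOM nodes, an assumption made so it runs outside a browser. A real `<tr>` would be coerced to `"[object HTMLTableRowElement]"` and collide in the same way.

```javascript
// Stand-ins for two DOM nodes (hypothetical; any two distinct objects behave the same).
const rowA = { label: 'row A' };
const rowB = { label: 'row B' };

// Object keys are converted to strings, so both rows become "[object Object]"
// and the second assignment silently overwrites the first.
const objStates = {};
objStates[rowA] = 'inactive';
objStates[rowB] = 'active';
console.log(Object.keys(objStates)); // ['[object Object]']
console.log(objStates[rowA]); // 'active' (collision!)

// A Map compares keys by identity, so each row keeps its own entry.
const mapStates = new Map();
mapStates.set(rowA, 'inactive');
mapStates.set(rowB, 'active');
console.log(mapStates.size); // 2
console.log(mapStates.get(rowA)); // 'inactive'
```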

Instead, a Map would allow us to use the HTML nodes as keys themselves. So, that snippet ends up looking like this:

```diff
 import { ref, watchEffect } from 'vue';

-let rowStates = {};
+let rowStates = new Map();
 let activeRow;

 document.querySelectorAll('tr').forEach((row) => {
-  rowStates[row.id] = ref(false);
+  rowStates.set(row, ref(false));

   row.addEventListener('click', () => {
-    if (activeRow) rowStates[activeRow].value = false;
+    if (activeRow) rowStates.get(activeRow).value = false;

-    activeRow = row.id;
+    activeRow = row;

-    rowStates[row.id].value = true;
+    rowStates.get(activeRow).value = true;
   });

   watchEffect(() => {
-    if (rowStates[row.id].value) {
+    if (rowStates.get(row).value) {
       row.classList.add('active');
     } else {
       row.classList.remove('active');
     }
   });
 });
```

The most obvious benefit here is that I don't need to worry about unique IDs existing on each row. The node references themselves – inherently unique – serve as the keys. Because of this, neither setting nor reading any attribute is necessary. It's simpler and more resilient.
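Without Vue, the bookkeeping reduces to something like the following. This is a minimal sketch, not Caleb's code. Plain objects stand in for `<tr>` nodes, a boolean stands in for `ref()`, and `createRowTracker`, `activate`, and `isActive` are hypothetical names.

```javascript
// Hypothetical helper: tracks which single row is "active", keyed by the row objects themselves.
function createRowTracker(rows) {
  // Each row is its own key; no id attribute is read or written.
  const rowStates = new Map(rows.map((row) => [row, false]));
  let activeRow = null;

  return {
    activate(row) {
      if (!rowStates.has(row)) throw new Error('Unknown row');
      // Deactivate the previously active row, if any.
      if (activeRow) rowStates.set(activeRow, false);
      activeRow = row;
      rowStates.set(row, true);
    },
    isActive(row) {
      return rowStates.get(row) === true;
    },
  };
}

// Plain objects standing in for <tr> elements.
const rows = [{ text: 'first' }, { text: 'second' }, { text: 'third' }];
const tracker = createRowTracker(rows);
tracker.activate(rows[0]);
tracker.activate(rows[2]);
console.log(rows.map((row) => tracker.isActive(row))); // [false, false, true]
```

Because the Map is keyed by the row objects themselves, nothing in the sketch ever touches an `id`.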

Reads and writes are also generally faster with a Map. I say "generally" because, in most cases, the difference is negligible. But when you're working with larger data sets, the operations are notably more performant. It's even in the specification, which requires Maps to be built in a way that preserves performance as the number of items continues to grow:

> Maps must be implemented using either hash tables or other mechanisms that, on average, provide access times that are sublinear on the number of elements in the collection.

"Sublinear" means that the average time to access an entry grows more slowly than the number of entries in the Map. So, even big Maps should remain fairly snappy.

But even on top of that, again, there's no need to mess with DOM attributes or perform a look-up by a string-like ID. Each key is itself a reference, which means we can skip a step or two.
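Here is the "sublinear" requirement from the spec stated more formally. T(n) is my own shorthand, not the spec's, for the average time of a single access in a Map holding n entries:

```latex
T(n) \in o(n) \iff \lim_{n \to \infty} \frac{T(n)}{n} = 0
```

A hash table's expected constant-time lookup and a balanced tree's logarithmic lookup both satisfy this. A linear scan through n entries does not.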

I did some rudimentary performance testing to confirm all of this. First, sticking with Caleb's scenario, I generated 10,000 `<tr>` elements on a page:

```javascript
const table = document.createElement('table');
document.body.append(table);

const count = 10_000;

for (let i = 0; i < count; i++) {
  const item = document.createElement('tr');
  item.id = i;
  item.textContent = 'item';
  table.append(item);
}
```

Next, I set up a template to measure how long it takes to loop over all of those rows and store some associated state in either an object or a Map. I ran that same process inside a `for` loop 1,000 times and then calculated the average time it took to write & read.

```javascript
const rows = document.querySelectorAll('tr');
const times = [];
const testMap = new Map();
const testObj = {};

for (let i = 0; i < 1000; i++) {
  const start = performance.now();

  rows.forEach((row, index) => {
    // Uncomment one test case at a time.

    // Test Case #1
    // testObj[row.id] = index;
    // const result = testObj[row.id];

    // Test Case #2
    // testMap.set(row, index);
    // const result = testMap.get(row);
  });

  times.push(performance.now() - start);
}

const average = times.reduce((acc, time) => acc + time, 0) / times.length;

console.log(average);
```

I ran this test with a range of different row counts.

| | 100 Items | 10,000 Items | 100,000 Items |
|---|---|---|---|
| Object | 0.023ms | 3.45ms | 89.9ms |
| Map | 0.019ms | 2.1ms | 48.7ms |
| % Faster | 17% | 39% | 46% |

Keep in mind these results would likely vary quite a bit in even slightly different circumstances, but on the whole, they generally met my expectations. When dealing with relatively small numbers of items, performance between a Map and object was comparable. But as the number of items increased, the Map started to pull away. That sublinear change in performance started to shine.
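If you'd like to rerun a similar comparison without a browser, here is a rough sketch. It assumes plain objects with a string `id` can stand in for `<tr>` elements. It uses 20 iterations instead of 1,000 to keep it quick, and it relies on the global `performance` object available in browsers and Node 16+. Expect your numbers to differ from the table above.

```javascript
// DOM-free sketch of the object-vs-Map benchmark. Plain objects with a string `id`
// stand in for <tr> elements (an assumption; real nodes may behave differently).
function makeRows(count) {
  return Array.from({ length: count }, (_, i) => ({ id: String(i) }));
}

// Runs `writeAndRead` over every row, `iterations` times, and returns the average ms.
function averageMs(rows, iterations, writeAndRead) {
  const times = [];
  for (let i = 0; i < iterations; i++) {
    const start = performance.now();
    rows.forEach(writeAndRead);
    times.push(performance.now() - start);
  }
  return times.reduce((acc, time) => acc + time, 0) / times.length;
}

const results = [];

for (const count of [100, 10_000, 100_000]) {
  const rows = makeRows(count);
  const testObj = {};
  const testMap = new Map();
  let checksum = 0; // consume every read so it isn't trivially dead code

  const objMs = averageMs(rows, 20, (row, index) => {
    testObj[row.id] = index;
    checksum += testObj[row.id];
  });

  const mapMs = averageMs(rows, 20, (row, index) => {
    testMap.set(row, index);
    checksum += testMap.get(row);
  });

  results.push({ count, objMs, mapMs, checksum });
  console.log(`${count} items: object ${objMs.toFixed(3)}ms, Map ${mapMs.toFixed(3)}ms`);
}
```

Numeric-looking string IDs like these can hit engine fast paths for array-like keys. That is one more reason the results may not line up with the browser numbers.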

There's a Map variant that's designed to steward memory a bit better: the WeakMap. It does so by holding a "weak" reference to its keys, so if an object key is no longer referenced anywhere else, it becomes eligible for garbage collection. When that key is collected, the entire entry is automatically axed from the WeakMap, clearing up even more memory. It works for DOM nodes too.
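Here is a small sketch of a WeakMap in that role, attaching per-node state without touching attributes. The `getNodeData` helper is hypothetical, and a plain object stands in for a DOM element so the snippet runs outside a browser.

```javascript
// A WeakMap holds its keys weakly, so keys must be objects (DOM nodes qualify).
const nodeData = new WeakMap();

// Hypothetical helper: lazily attach per-node state without touching attributes.
function getNodeData(node) {
  let data = nodeData.get(node);
  if (!data) {
    data = { clicks: 0 };
    nodeData.set(node, data);
  }
  return data;
}

// Plain object standing in for a real element (assumption for running outside a browser).
const fakeNode = { tagName: 'LI' };
getNodeData(fakeNode).clicks++;
getNodeData(fakeNode).clicks++;
console.log(getNodeData(fakeNode).clicks); // 2

// Primitive keys can't be held weakly, so set() rejects them.
let rejectedPrimitive = false;
try {
  nodeData.set('item2', { clicks: 0 });
} catch (error) {
  rejectedPrimitive = error instanceof TypeError;
}
console.log(rejectedPrimitive); // true

// There's no size or iteration: entries may vanish whenever their key is collected.
console.log(nodeData.size, typeof nodeData.keys); // undefined undefined
```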

To tinker with this, we'll be using the FinalizationRegistry, which triggers a callback whenever a reference you're watching has been garbage collected (I never expected to find something like this handy, lol). We'll start with a few list items:

```html
<ul>
  <li id="item1">first</li>
  <li id="item2">second</li>
  <li id="item3">third</li>
</ul>
```

Next, we'll stick those items in a WeakMap, and register item2 to be watched by the registry. We'll remove it, and whenever it's been garbage collected, the callback will be triggered and we'll be able to see how the WeakMap has changed.

But... garbage collection is unpredictable and there's no official way to make it happen, so to encourage it, we'll periodically generate a bunch of objects and persist them in memory. Here's the entire script:

```javascript
(async () => {
  const listMap = new WeakMap();

  // Stick each item in a WeakMap.
  document.querySelectorAll('li').forEach((node) => {
    listMap.set(node, node.id);
  });

  const registry = new FinalizationRegistry((heldValue) => {
    // Garbage collection has happened!
    console.log('After collection:', heldValue);
  });

  registry.register(document.getElementById('item2'), listMap);

  console.log('Before collection:', listMap);

  // Remove node, freeing up reference!
  document.getElementById('item2').remove();

  // Periodically create a bunch o' objects to trigger collection.
  const objs = [];

  while (true) {
    for (let i = 0; i < 100; i++) {
      objs.push(...new Array(100));
    }

    await new Promise((resolve) => setTimeout(resolve, 10));
  }
})();
```

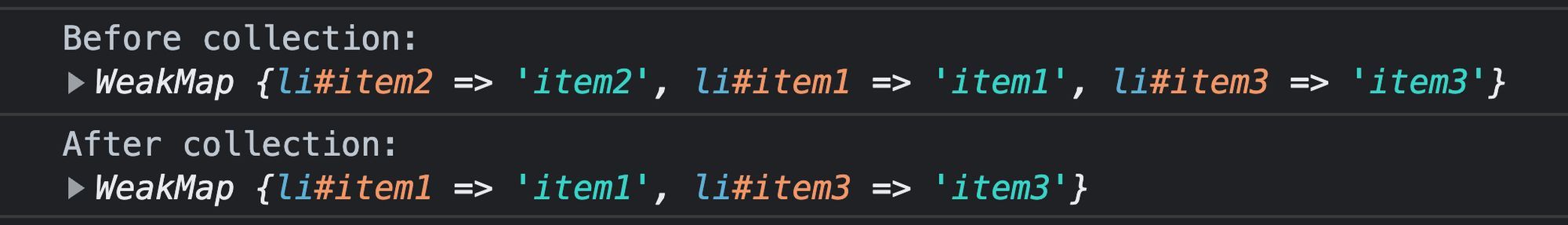

Before anything happens, the WeakMap holds three items, as expected. But after the second item is removed from the DOM and garbage collection occurs, it looks a little different.

Once the node was removed from the DOM, nothing else referenced it, so the entire entry was removed from the WeakMap, freeing up a smidge more memory. It's a feature I appreciate for keeping an environment's memory just a bit tidier.
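You can poke at the same mechanics outside the browser, since Node exposes a manual `gc()` when started with `--expose-gc`. This is a rough sketch that assumes that flag, with a plain object standing in for the `<li>` node. Even with `gc()`, there is no guarantee whether or when the finalization callback runs.

```javascript
// Node sketch of the same experiment. Run with `node --expose-gc demo.js` so that
// `globalThis.gc()` exists; without the flag, collection is up to the engine.
const listMap = new WeakMap();
const registry = new FinalizationRegistry((label) => {
  console.log(`After collection: ${label} is gone`);
});

// A plain object stands in for the <li> node (assumption: there's no DOM in Node).
let item2 = { id: 'item2' };
listMap.set(item2, item2.id);

// The held value must not be the target itself, or register() throws a TypeError.
registry.register(item2, 'item2');

// Drop the only strong reference, like removing the node from the DOM.
item2 = null;

if (typeof globalThis.gc === 'function') {
  globalThis.gc();
} else {
  console.log('gc() not exposed; the callback may run much later, or never.');
}

// Finalization callbacks run asynchronously, so give the event loop a moment.
setTimeout(() => console.log('Done waiting.'), 100);
```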

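I like using Maps for DOM nodes because:

- Nodes can be used as keys directly, so there's no need to generate or read a unique ID for each one.
- Reads and writes are generally faster, especially as the number of nodes grows.
- A WeakMap with nodes as keys means entries will be automatically garbage collected once a node is removed from the DOM and nothing else references it.

I'm interested in hearing about other good reasons to use "newish" objects like Map and Set in real-life scenarios. If you have 'em, share 'em!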
